Photorealistic 3D reconstruction methods such as NeRF and Gaussian Splatting capture geometry and appearance but not physics, which limits them to static scenes. Recently, there has been a surge of interest in integrating physics into 3D modeling, but existing test-time optimisation methods are slow and scene-specific, requiring a fresh optimisation for every scene. Pixie instead trains a neural network that maps pretrained visual features (CLIP) to dense material fields of physical properties in a single forward pass, enabling real-time physics simulation.

PixieVerse is a large-scale synthetic benchmark for visual-physics learning. It features thousands of high-quality assets that span diverse semantic classes and material behaviours, each annotated with dense physical properties. The dataset is labeled automatically via a VLM pipeline we developed.

Annotations: Young's modulus E, Poisson's ratio ν, density ρ, and a discrete material ID.
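Elastic constitutive models used in MPM solvers (e.g., fixed-corotated or Neo-Hookean) are typically parameterized by the Lamé parameters μ and λ rather than by Young's modulus E and Poisson's ratio ν directly. A minimal sketch, assuming a hypothetical `MaterialAnnotation` record (not PixieVerse's actual file format), that validates one point's labels and applies the standard isotropic conversion μ = E / (2(1 + ν)), λ = Eν / ((1 + ν)(1 − 2ν)):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaterialAnnotation:
    """Hypothetical per-point label: Young's modulus E (Pa), Poisson's ratio nu,
    density rho (kg/m^3), and a discrete material ID."""
    E: float
    nu: float
    rho: float
    material_id: int

    def __post_init__(self) -> None:
        # Isotropic linear elasticity requires E > 0 and -1 < nu < 0.5.
        if self.E <= 0:
            raise ValueError("Young's modulus must be positive")
        if not -1.0 < self.nu < 0.5:
            raise ValueError("Poisson's ratio must lie in (-1, 0.5)")
        if self.rho <= 0:
            raise ValueError("density must be positive")

    def lame(self) -> tuple[float, float]:
        """Return the Lamé parameters (mu, lam) for isotropic elasticity."""
        mu = self.E / (2.0 * (1.0 + self.nu))
        lam = self.E * self.nu / ((1.0 + self.nu) * (1.0 - 2.0 * self.nu))
        return mu, lam


# Illustrative rubber-like values, not taken from PixieVerse.
sample = MaterialAnnotation(E=1e6, nu=0.45, rho=1100.0, material_id=0)
mu, lam = sample.lame()
```

The range check on ν matters because λ diverges as ν approaches 0.5 (the incompressible limit), which would make an explicit MPM step unstable.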

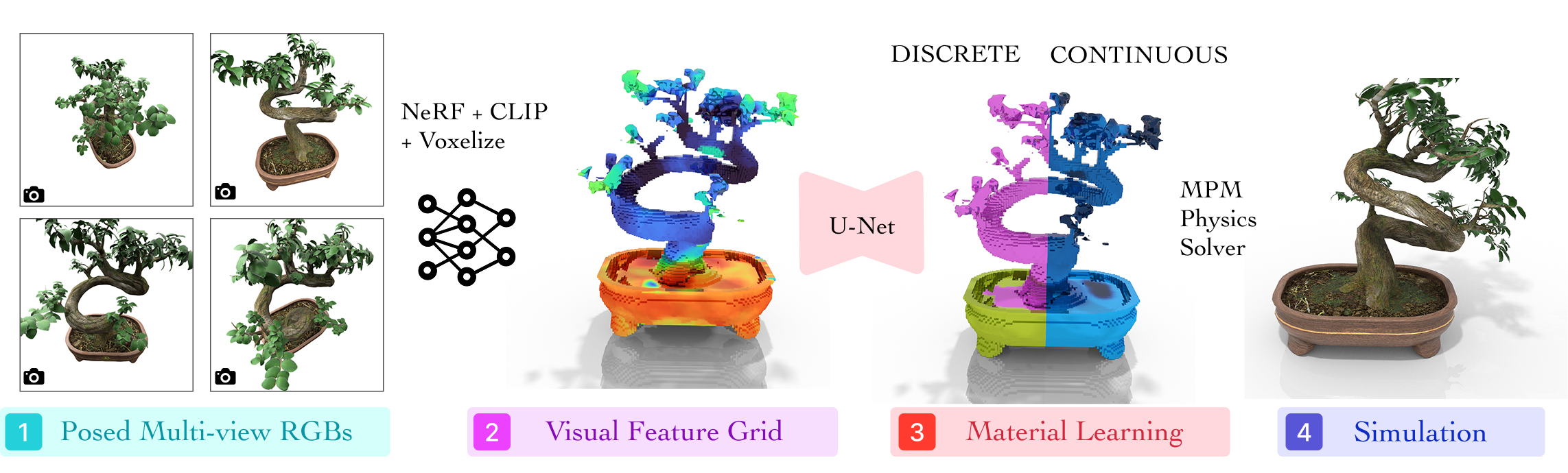

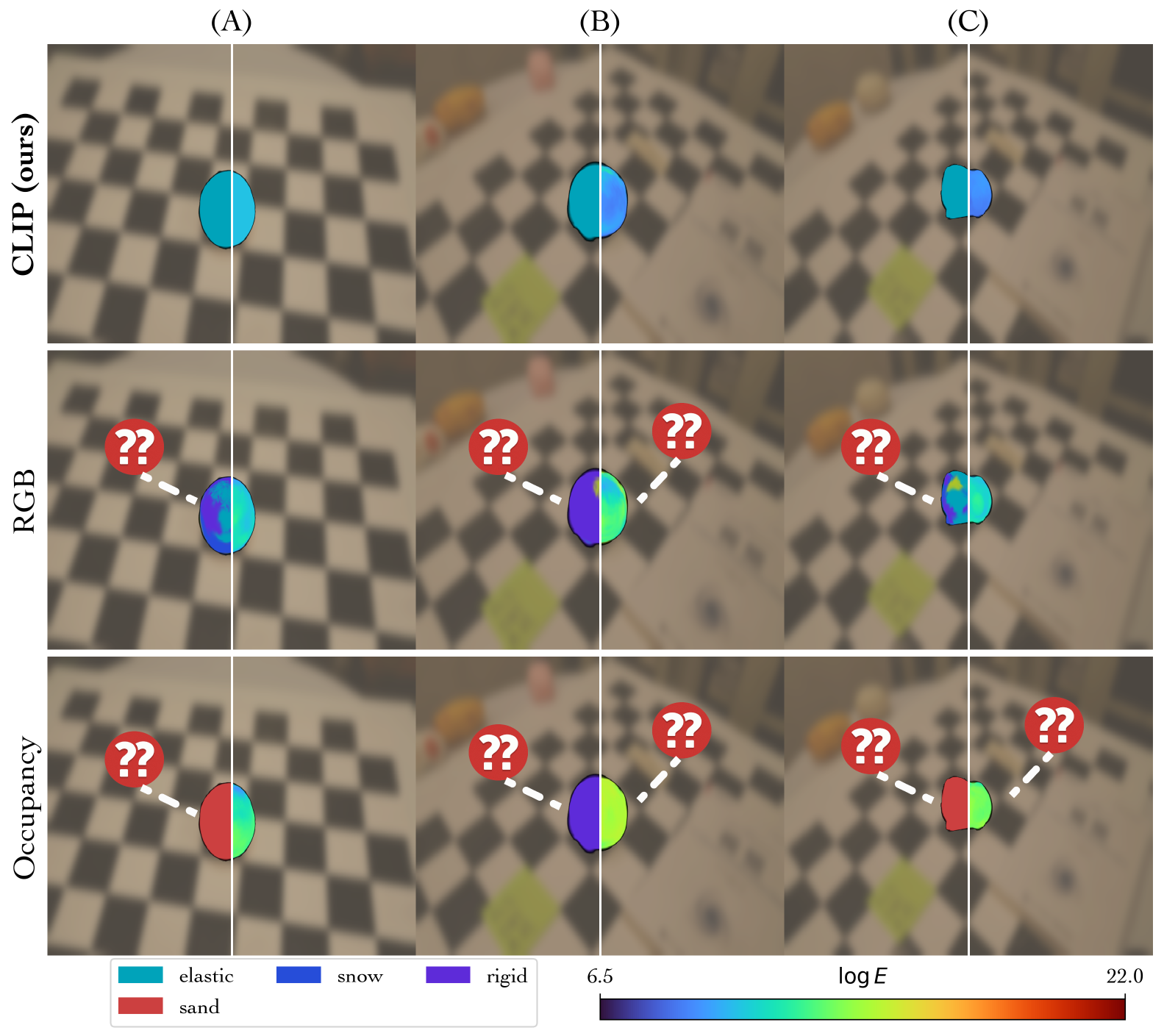

Multi-view posed RGB images are encoded by a NeRF with distilled CLIP features, yielding a 3D feature grid. A 3D U-Net maps this grid to material fields, which are transferred onto Gaussian splats and simulated with a Material Point Method (MPM) physics solver to produce 3D physics simulations.
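The transfer step, moving a voxel-grid material field onto Gaussian splats, can be sketched as sampling the grid at each Gaussian's center. This is a minimal NumPy sketch under stated assumptions, not Pixie's actual transfer code: the grid is cubic with resolution R, its grid points span an axis-aligned box [lo, hi], and all function names are hypothetical. Continuous properties are trilinearly interpolated, while discrete material IDs use a nearest-voxel lookup so that IDs are never averaged. The toy continuous field is named `log_E` because interpolating E in log space is a common choice when it spans orders of magnitude; that choice is also an assumption.

```python
import numpy as np


def world_to_grid(points, lo, hi, res):
    """Map world-space points (N, 3) to continuous grid coordinates in [0, res-1]."""
    u = (points - lo) / (hi - lo) * (res - 1)
    return np.clip(u, 0.0, res - 1)


def trilinear(field, points, lo, hi):
    """Trilinearly sample a cubic (R, R, R, C) field at world-space points (N, 3)."""
    res = field.shape[0]
    u = world_to_grid(points, lo, hi, res)
    i0 = np.clip(np.floor(u).astype(int), 0, res - 2)  # lower corner of the enclosing cell
    t = u - i0                                          # fractional offset in [0, 1]
    out = np.zeros((points.shape[0], field.shape[-1]))
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                # Corner weight is the product of the per-axis linear weights.
                w = ((t[:, 0] if dx else 1 - t[:, 0])
                     * (t[:, 1] if dy else 1 - t[:, 1])
                     * (t[:, 2] if dz else 1 - t[:, 2]))
                out += w[:, None] * field[i0[:, 0] + dx, i0[:, 1] + dy, i0[:, 2] + dz]
    return out


def nearest(labels, points, lo, hi):
    """Nearest-voxel lookup in a cubic (R, R, R) integer label grid (IDs are never blended)."""
    res = labels.shape[0]
    idx = np.rint(world_to_grid(points, lo, hi, res)).astype(int)
    return labels[idx[:, 0], idx[:, 1], idx[:, 2]]


# Toy 5^3 grid over the unit cube; the field is linear in the grid indices.
R = 5
ii, jj, kk = np.meshgrid(np.arange(R), np.arange(R), np.arange(R), indexing="ij")
log_E = (ii + jj + kk).astype(float)[..., None]   # (R, R, R, 1) continuous field
ids = (ii >= 2).astype(int)                        # (R, R, R) discrete label grid
centers = np.array([[0.5, 0.5, 0.5], [0.1, 0.0, 0.0], [0.9, 0.1, 0.1]])  # Gaussian centers
lo, hi = np.zeros(3), np.ones(3)
vals = trilinear(log_E, centers, lo, hi)
labs = nearest(ids, centers, lo, hi)
```

Because trilinear interpolation reproduces linear functions exactly, the toy field makes the result easy to check by hand: the second center maps to grid coordinate (0.4, 0, 0) and receives the value 0.4.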

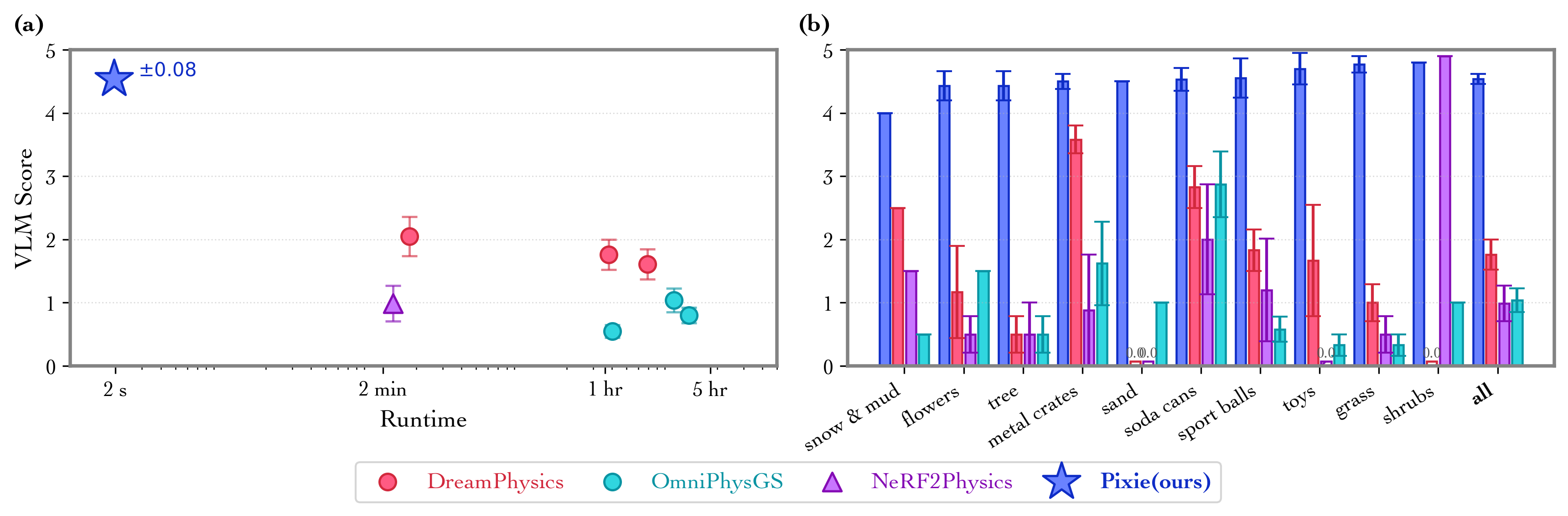

VLM Score vs Runtime: On the PixieVerse benchmark, Pixie outperforms DreamPhysics, OmniPhysGS, and NeRF2Physics by 1.46-4.39x in Gemini-Pro realism score while running 10³× faster.

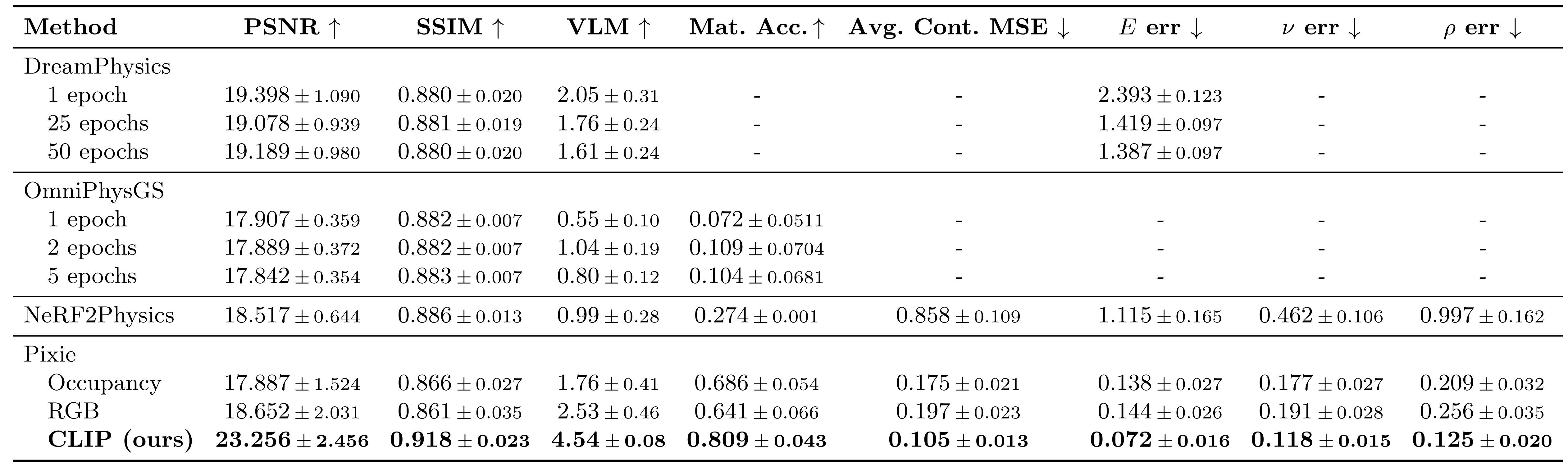

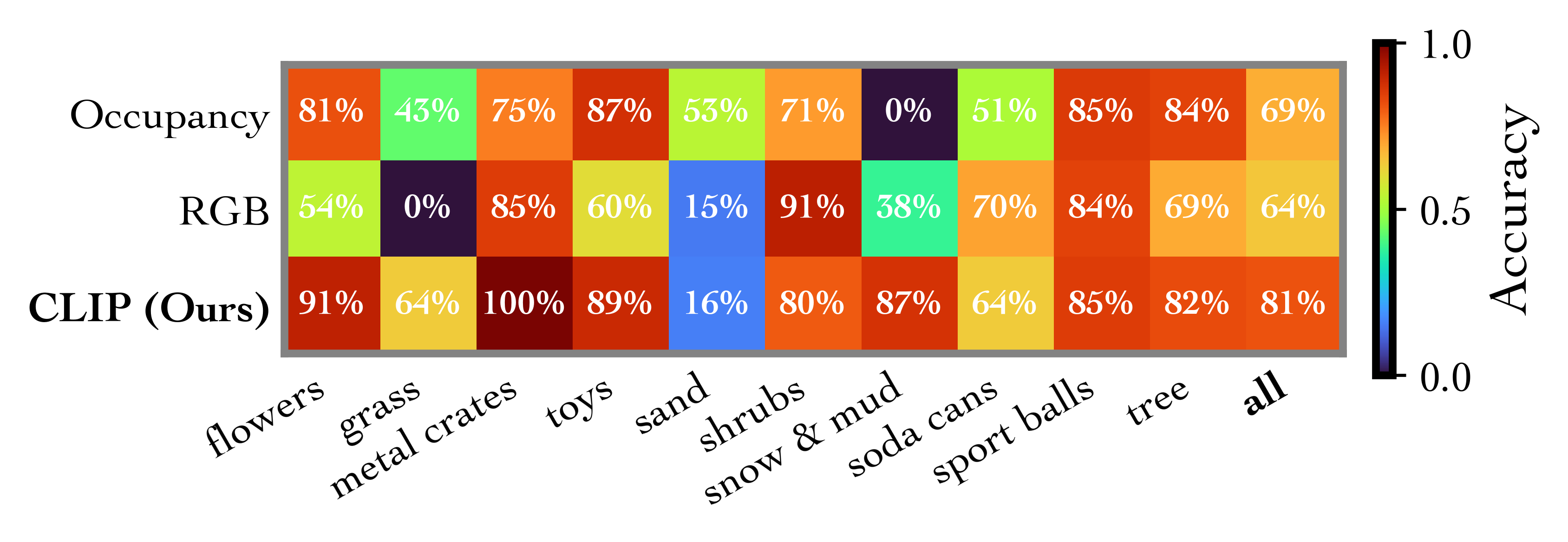

More quantitative results! We also report perceptual metrics (PSNR, SSIM) against the reference videos in PixieVerse, the Gemini VLM scores, and five other metrics that our method optimizes, including discrete material accuracy and continuous errors over E, ν, ρ. Standard errors and 95% CIs are also included, and the best values are in bold. Pixie is by far the best performer.

1.46-4.39x improvement in VLM score and 3.6-30.3% gains in PSNR and SSIM against competitors!
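The metrics in this table can be approximated with a few lines of NumPy. This is a minimal sketch under stated assumptions, not the paper's evaluation code, and the function names are hypothetical: PSNR assumes frames scaled to [0, 1]; the 95% CI uses a normal approximation (mean ± 1.96·SE), since the exact interval procedure is not stated here; and the continuous error on E is assumed to be measured in log10 space. SSIM is omitted because it needs windowed local statistics; scikit-image's `skimage.metrics.structural_similarity` provides one implementation.

```python
import numpy as np


def psnr(pred, ref, max_val=1.0):
    """Peak signal-to-noise ratio in dB between arrays with values in [0, max_val]."""
    mse = np.mean((np.asarray(pred, float) - np.asarray(ref, float)) ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / mse))


def mean_se_ci95(scores):
    """Mean, standard error, and a normal-approximation 95% confidence interval."""
    x = np.asarray(scores, float)
    mean = x.mean()
    se = x.std(ddof=1) / np.sqrt(len(x))  # sample standard deviation / sqrt(n)
    half = 1.96 * se                      # two-sided 95% z-value
    return float(mean), float(se), (float(mean - half), float(mean + half))


def material_accuracy(pred_ids, true_ids):
    """Fraction of points whose predicted discrete material ID matches the label."""
    return float(np.mean(np.asarray(pred_ids) == np.asarray(true_ids)))


def log10_abs_error(pred_E, true_E):
    """Mean absolute error of Young's modulus in log10 space (an assumed convention)."""
    return float(np.mean(np.abs(np.log10(pred_E) - np.log10(true_E))))
```

For example, a frame that is uniformly off by 0.1 from its reference has MSE 0.01 and therefore a PSNR of 20 dB.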

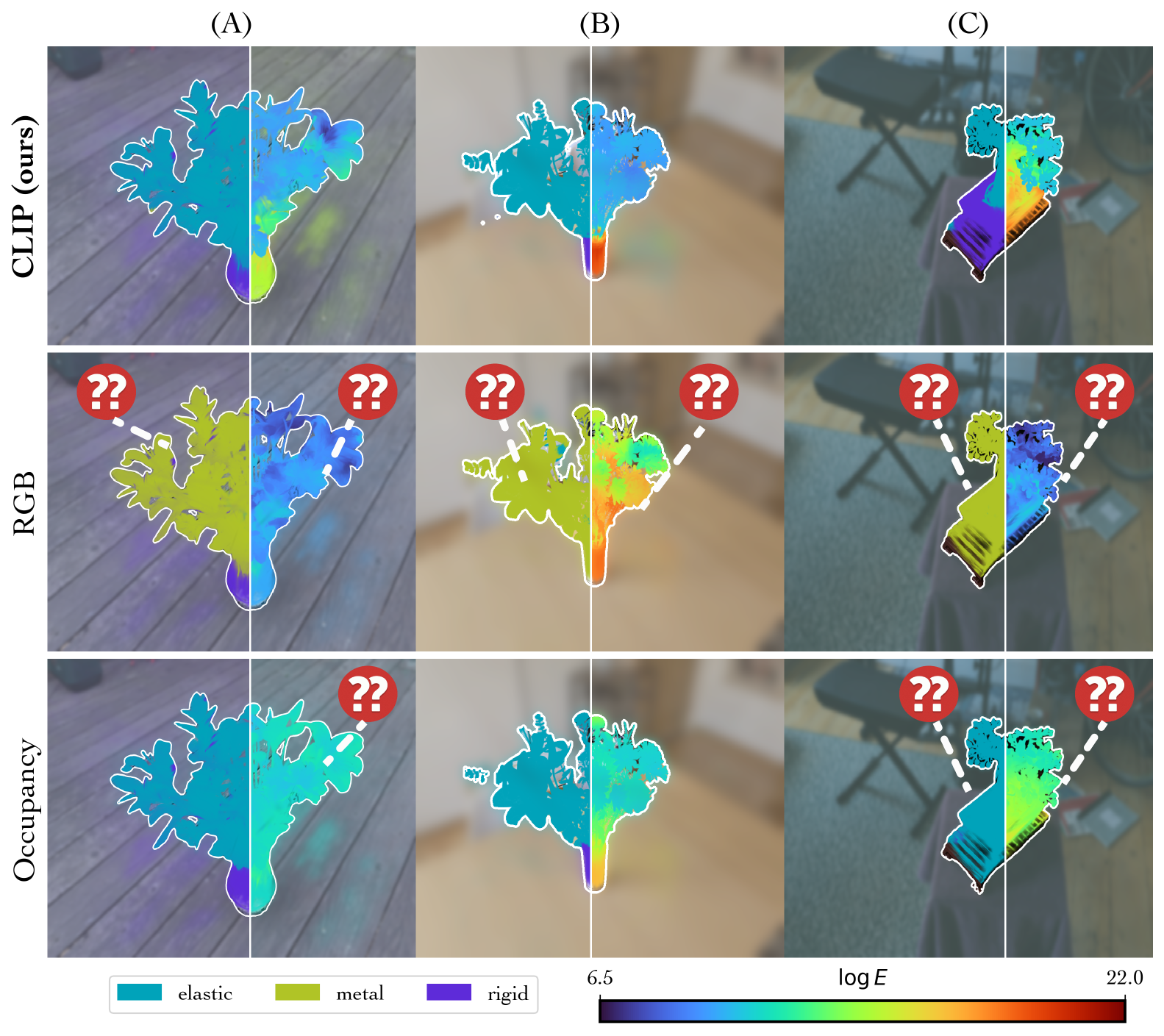

Pixie's material predictions on captured NeRF scenes, comparing the input RGB with the predicted physics fields.

@article{le2025pixie,
  title={Pixie: Fast and Generalizable Supervised Learning of 3D Physics from Pixels},
  author={Le, Long and Lucas, Ryan and Wang, Chen and Chen, Chuhao and Jayaraman, Dinesh and Eaton, Eric and Liu, Lingjie},
  journal={arXiv preprint arXiv:2508.17437},
  year={2025}
}